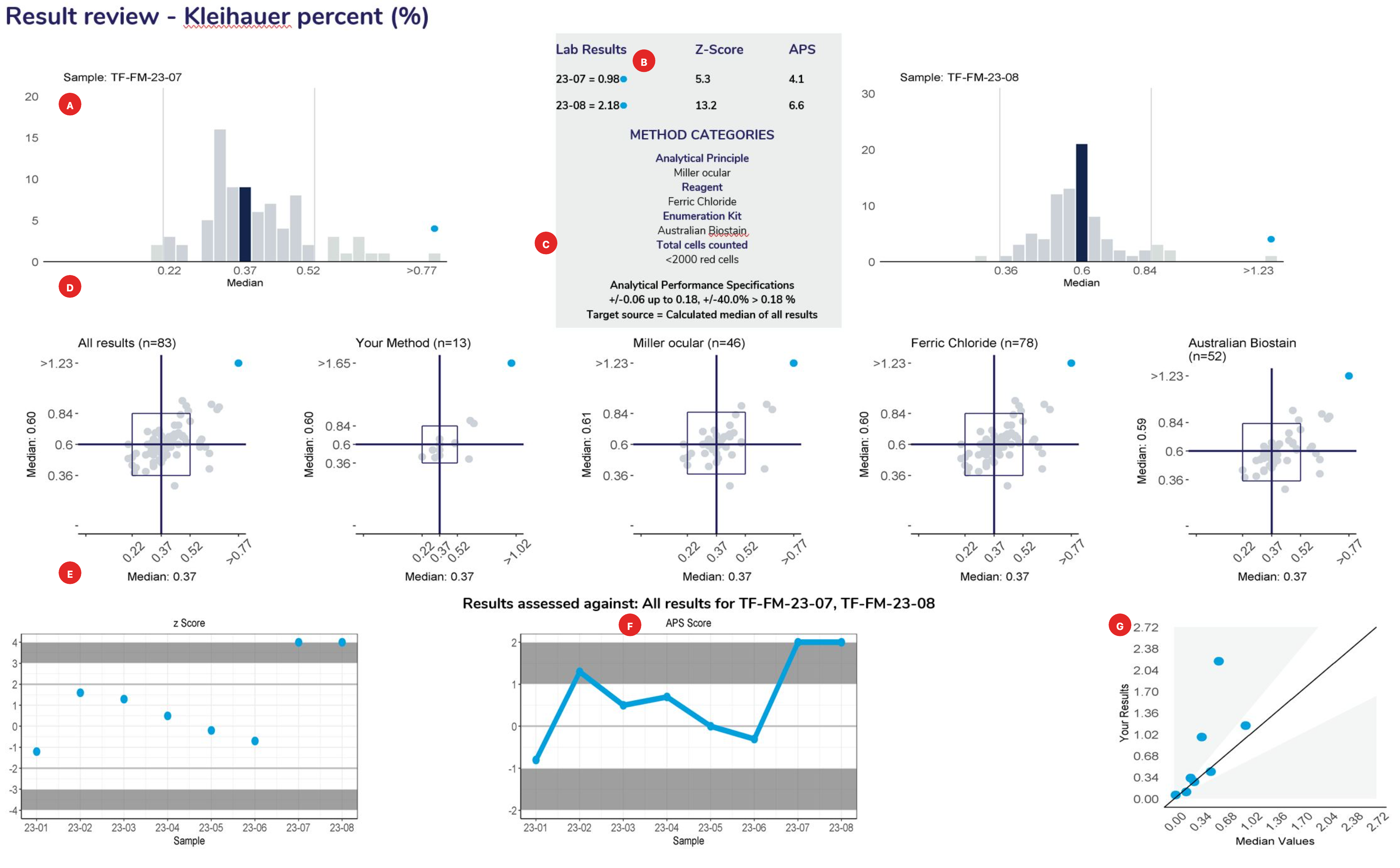

Histograms

Provides a frequency distribution of the results submitted by all participants. The blue bar represents the median or target result; the blue circle indicates the participant’s result.

The Transfusion discipline programs are structured to provide a peer group comparison of individual participant results with all results received. The survey reports provide graphical representations of participant results for all methods used for the required tests performed by participating laboratories. Participant results for quantitative analysis are compared to a calculated median or target from all method classifications for a particular test, whereas a scoring system is used to assess the performance of participants enrolled in programs that require interpretive responses. A guide to interpreting Blood Group and Antibody reports is available on the myQAP help page.

Participant results are assessed only if the results of stability testing performed on or after the close date are acceptable. If stability testing results are deemed unacceptable, the survey will not be assessed and participants will be notified in writing. A participant consensus of 80% (or the median response for quantitative results) is used to determine the expected survey target, and a minimum of six (6) responses is required to set a consensus target.
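The target-setting rules in the paragraph above can be sketched as follows, assuming each sample's responses are available as a plain Python list. The function names, and the choice to return `None` when no target can be set, are hypothetical; the sketch does not model the Transfusion Advisory Committee's discretion over final targets.

```python
from collections import Counter
from statistics import median

MIN_RESPONSES = 6       # minimum number of responses required to set a consensus target
CONSENSUS_SHARE = 0.80  # 80% participant agreement for interpretive responses


def qualitative_target(responses):
    """Return the consensus response, or None if no target can be set."""
    if len(responses) < MIN_RESPONSES:
        return None
    response, count = Counter(responses).most_common(1)[0]
    if count / len(responses) >= CONSENSUS_SHARE:
        return response
    return None


def quantitative_target(results):
    """Return the median of submitted results, or None if too few were received."""
    if len(results) < MIN_RESPONSES:
        return None
    return median(results)
```

For example, eight "Positive" and two "Negative" responses reach exactly 80% agreement and set "Positive" as the target, whereas seven and three do not.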

The survey report provides a simple, direct comparison of individual participant results with all results received, along with graphical representations of results from the same method and reagent groups. Participants’ results are compared to a calculated median from all method classifications for a particular test, as well as with results within the same method or reagent group. Survey reports are issued for each measurand/test after the scheduled closing date. Analytical Performance Specifications (APS) are unique to each test. The APS limits are calculated from the overall median target value and are used in the histograms and Youden plots. The APS are based on clinical needs and are set and reviewed by program organisers and expert committee members.

The FMH program provides assessment for quantitative and qualitative measurands.

Survey objective target values are determined by the RCPAQAP Transfusion Advisory Committee. Assessment of participant performance is based on the median % foetal cells from submitted results. The selected values are used to calculate the target volume (mL) of cord blood and the recommended target dose of anti-D immunoglobulin.

Survey Reports summarise every pair of specimens for each measurand and provide summary data on your performance throughout the cycle. Reports provide a graphical comparison of individual results with all results received and with participants using the same method categories, such as the analytical principle, measurement system and reagent source.

Quantitative results are usually compared with the specific target value (if known), the “overall median” or the median of the main method category (the category median). Analytical Performance Specifications (APS) are set on either side of the expected value. Non-numerical (descriptive) results are compared with a target value or the overall method group consensus.

The Analytical Performance Specifications (APS) are unique for each measurand, and the acceptable range for each specimen is calculated from the central value (target, median or weighed-in value). These ranges are displayed in the report histograms and Youden Plots. The comment “Low” or “High” is added if the result is outside the APS and will be highlighted for review.
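The range check described above can be sketched as below, assuming the APS limit has already been converted to measurand units (for example, 10% of an 18.40 target gives 1.84). The function names and the "W" return value for results inside the range are illustrative; treating a result that sits exactly on a limit as within the APS is an assumption.

```python
def aps_range(central_value, aps_limit):
    """Acceptable range: the APS limit applied either side of the central value."""
    return central_value - aps_limit, central_value + aps_limit


def review_flag(result, central_value, aps_limit):
    """Return 'Low', 'High' or 'W' (within APS, no review required)."""
    low, high = aps_range(central_value, aps_limit)
    if result < low:
        return "Low"
    if result > high:
        return "High"
    return "W"  # assumption: results exactly on a limit count as within the APS
```

With a central value of 18.40 and a limit of 1.84, the acceptable range is roughly 16.56 to 20.24, so 19.30 returns "W" and 21.0 returns "High".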

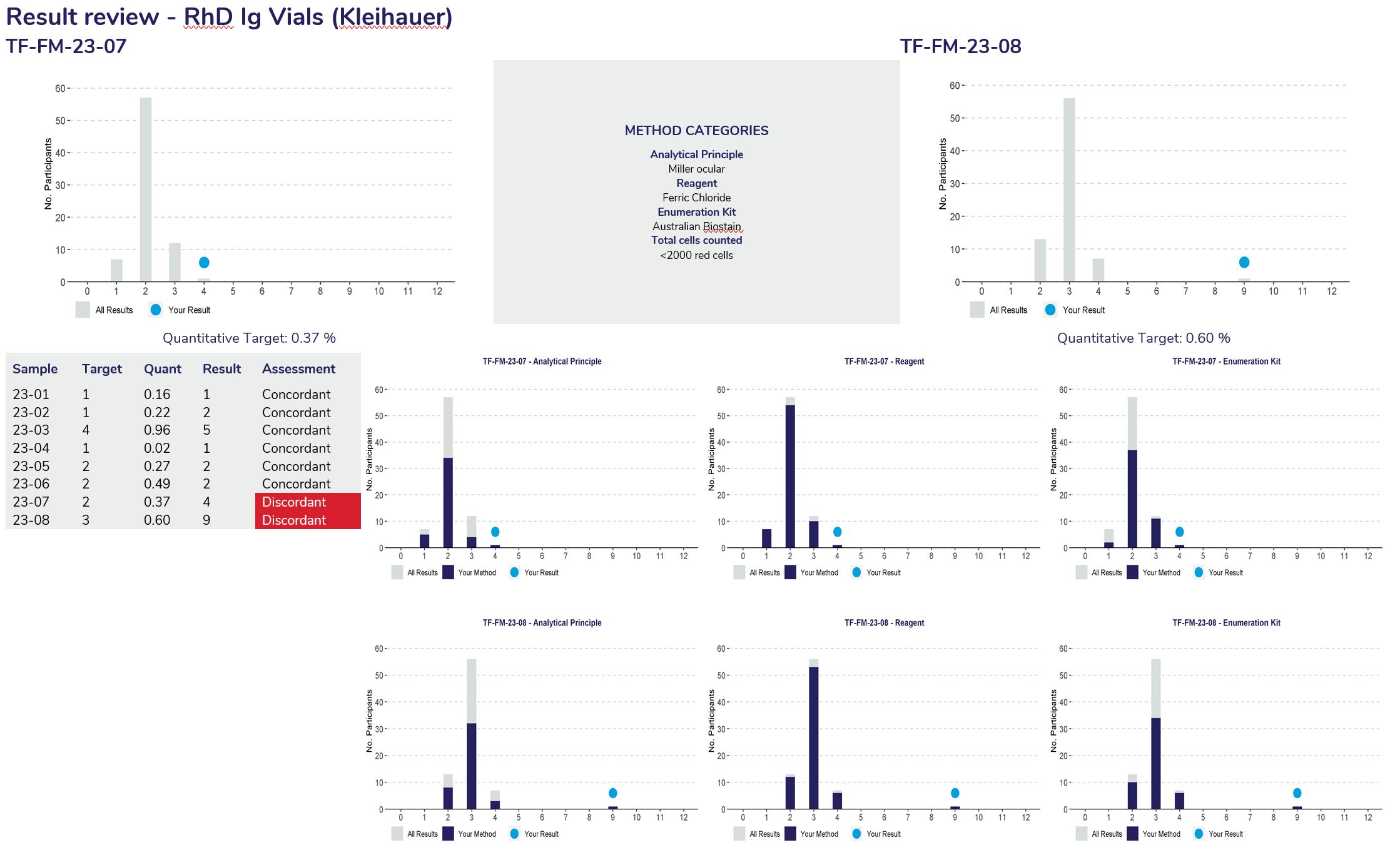

Qualitative measurands (RhD Ig Vials) display the responses received, comparing results to specific targets set by the organisers.

Note: The z-score is provided as an additional parameter to demonstrate the number of standard deviations a participant’s result is away from the mean value. It measures performance based on what is achievable from test methods. Participants are not assessed against the z-score.
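As a rough illustration of the z-score described in the note, the sketch below measures a result's distance from the peer-group mean in standard deviations. It assumes a plain mean and sample standard deviation of all submitted results; the statistics RCPAQAP actually uses (for example, trimmed or robust estimates) are not stated here, and the function name is hypothetical.

```python
from statistics import mean, stdev


def z_score(result, peer_results):
    """Number of standard deviations the result lies from the peer-group mean."""
    # Assumption: plain mean and sample SD of the peer results (at least two values).
    return (result - mean(peer_results)) / stdev(peer_results)
```

For peers reporting 0, 2 and 4 (mean 2, SD 2), a result of 6 gives a z-score of 2.0.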

The new report format follows a standard structure for all disciplines that survey quantitative measurands with two samples in each survey challenge.

The survey reports incorporate linear regression analysis to determine the precision and accuracy of the testing procedure.

Some programs allow participants to submit results in SI or mass units. The report will default to the units submitted by the laboratory.

If survey results are not received by the close date an appropriate message is returned.

The structure of the report is as follows:

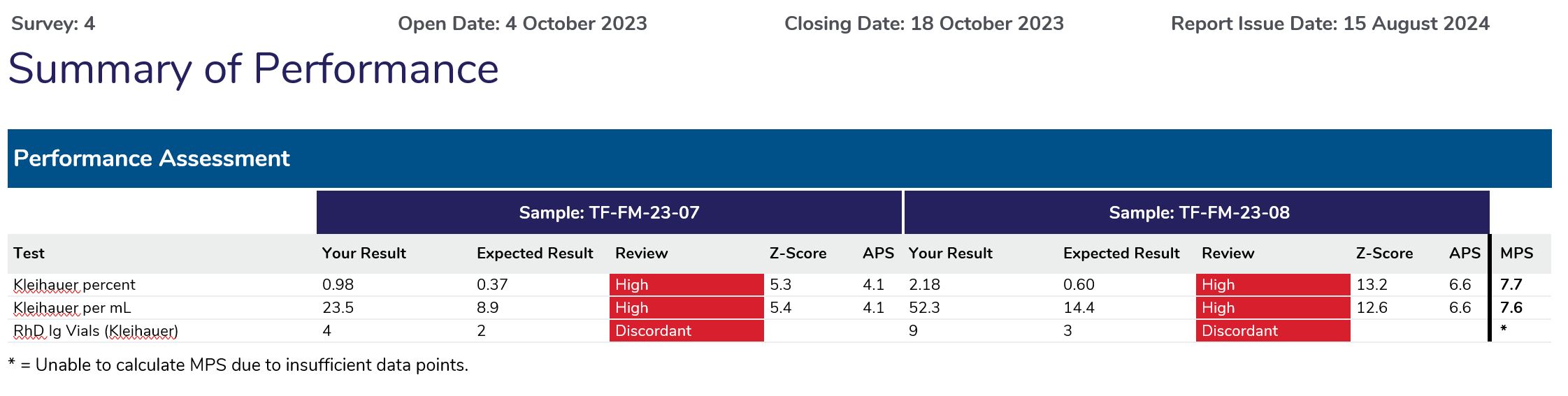

| Please review results returned for Sample(s): | TF-FM-23-07 | TF-FM-23-08 |
|---|---|---|
| Kleihauer percent | High | High |
| Kleihauer per mL | High | High |
| RhD Ig Vials (Kleihauer) | Discordant | Discordant |

The Summary of Performance provides participants with an assessment of their overall performance and indicates what measurands require further review.

APS score calculation

Measurand APS: ±0.5 up to 5.00; ±10.0% above 5.00 × 10⁹ cells/L (5.0 is the measurand decision point)

| | Lab result above decision point | Lab result below decision point |
|---|---|---|
| Lab result | 19.30 (above decision point: 10% range) | 2.90 (below decision point: ±0.5 range) |
| Target result | 18.40 | 2.60 |
| Measurand limit | 10% of 18.40 = 1.840 | 0.5 |
| APS score | (19.30 - 18.40) / 1.840 = 0.49 | (2.90 - 2.60) / 0.5 = 0.60 |
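The APS score calculation above can be sketched as follows. The constants mirror the measurand APS quoted above. Applying the decision point to the target value, rather than to the laboratory result, is an assumption: in both worked examples the two values fall on the same side of 5.0. The function names are hypothetical.

```python
DECISION_POINT = 5.0   # at or below this level a fixed limit applies
ABSOLUTE_LIMIT = 0.5   # +/- 0.5 up to 5.00
RELATIVE_LIMIT = 0.10  # +/- 10% above 5.00


def measurand_limit(target):
    """APS limit in measurand units, chosen by the decision-point rule."""
    # Assumption: the target value decides which side of the decision point applies.
    if target > DECISION_POINT:
        return RELATIVE_LIMIT * target
    return ABSOLUTE_LIMIT


def aps_score(result, target):
    """Signed distance of the result from the target, in units of the APS limit."""
    return (result - target) / measurand_limit(target)
```

The two worked examples give approximately 0.49 and 0.60. A positive score means the result lies above the target, and a score beyond ±1 falls outside the APS.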

Provides a frequency distribution of the results submitted by all participants. The blue bar represents the median or target result; the blue circle indicates the participant’s result.

Lists the results and method categories returned by the participant.

The APS that has been assigned to the measurand.

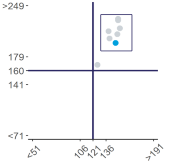

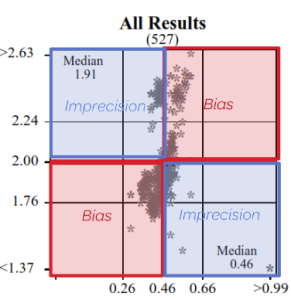

Represents a scatter plot of the two sample results plotted against each other. The sample with the higher measurand level is plotted on the y-axis against the lower level on the x-axis. Five Youden plots are presented; from left to right, they show all results, the participant’s method, analytical principle, measurement system and reagent. The participant’s result is highlighted by the blue dot.

Examples of Youden charts (panels: Biased results; Biased Laboratory)

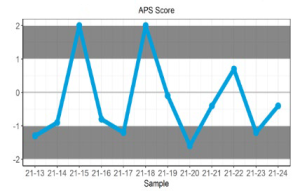

Displays the participant’s z-score for up to 6 sets of returned results and provides an indication of the precision and accuracy of results within a survey year.

Displays the APS score for up to 6 sets of returned results and provides an indication of the precision and accuracy of results within a survey year.

Examples of plots (panels: Imprecision; Bias)

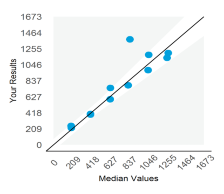

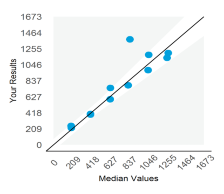

Displays the participant’s result against the expected result and indicates linearity across the different survey sample levels.

Examples of plots (panels: Imprecision; Bias)

Measurand performance: The Measurand performance page provides participants with a breakdown of the results returned.


Precision and Accuracy

High/Low or Low/High = Imprecision

High/High or Low/Low = Bias
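The two rules above can be written as a small lookup on the pair of review flags for the two survey samples. This is a hypothetical helper, not part of the report. Pairs the rules do not cover (any result within APS, flagged "W") return `None`.

```python
def classify_pair(flag_a, flag_b):
    """Classify the High/Low review flags of a sample pair as imprecision or bias."""
    pair = {flag_a, flag_b}
    if pair == {"High", "Low"}:
        return "Imprecision"  # results fall on opposite sides of the target
    if len(pair) == 1 and flag_a in ("High", "Low"):
        return "Bias"  # both results fall on the same side of the target
    return None  # at least one result within APS: neither rule applies
```

Under these rules, the sample report's Kleihauer percent flags (High/High) would be read as bias.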

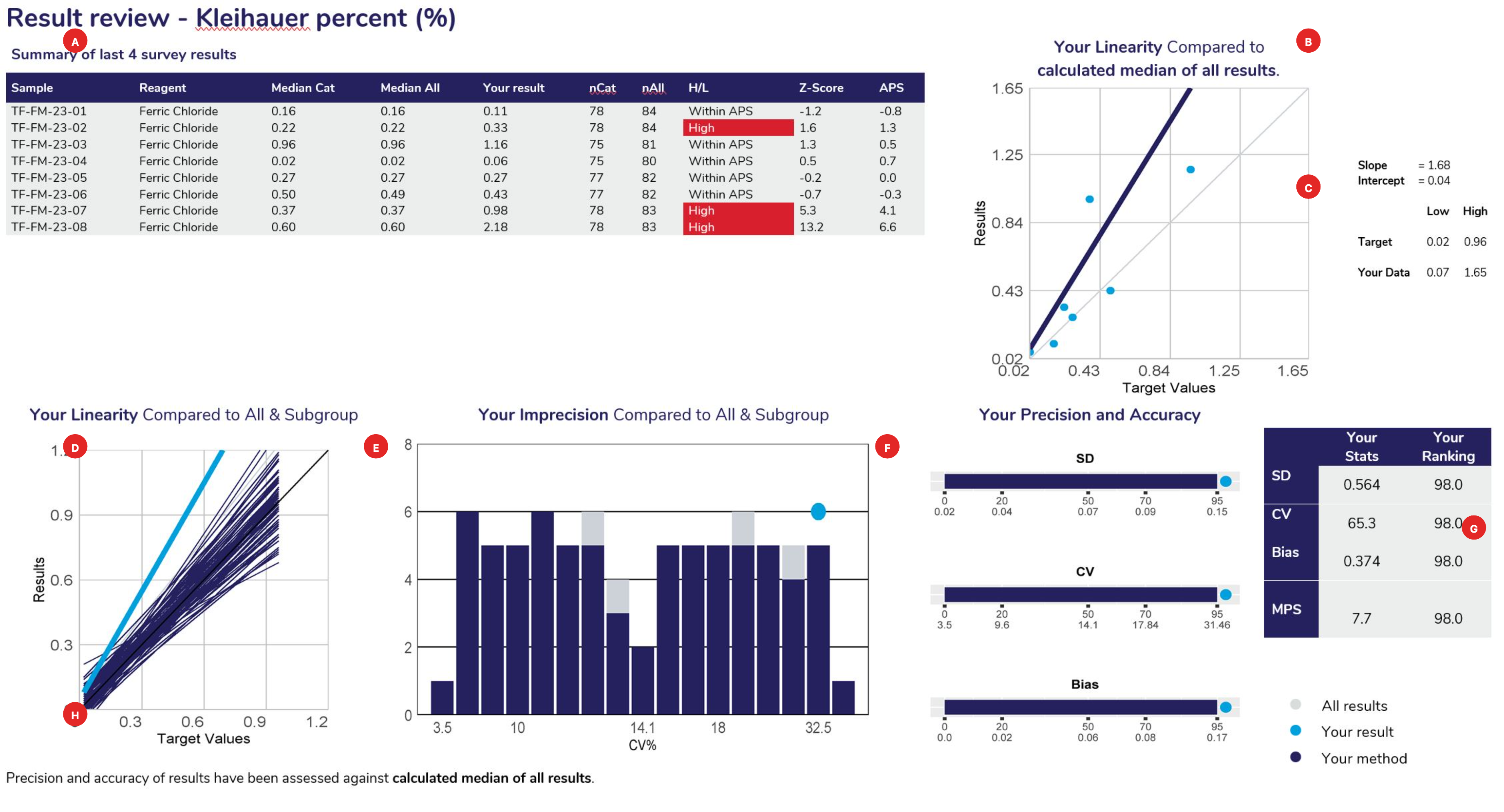

Displays the samples used in the linear regression analysis and the main method category for this program (the Kleihauer percent example displays “reagent method – Ferric Chloride”). The table columns are: Sample ID; the Measurement System category assigned for result comparison; the median result for the method category; the median of ALL results; the participant’s result; the number of results in the method category; the number of results from all submissions; the High/Low review flag (W = within APS, no review required); the z-score; and the APS score.

Linear regression analysis is performed against the target source of each sample, which may be the “median of all results”, the “median of the assessment category” (measurement system) or a “specified target”.

When performing linear regression, the following rules of assessment are applied:

Provides the slope and intercept calculated from the linear regression analysis. Also provides the target low and high level, with your corresponding low and high levels determined from the “line of best fit”.

Using the slope and intercept, the values on your line of best fit are calculated at the lowest and highest target values for the cycle.
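The slope, intercept and fitted low and high levels described above can be sketched with an ordinary least-squares fit of participant results (y) on target values (x). Unweighted ordinary least squares is an assumption, since the guide does not name the regression method. The function names are hypothetical.

```python
def fit_line(targets, results):
    """Ordinary least-squares fit of participant results (y) on target values (x)."""
    n = len(targets)
    mean_x = sum(targets) / n
    mean_y = sum(results) / n
    sxx = sum((x - mean_x) ** 2 for x in targets)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(targets, results))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def fitted_range(targets, results):
    """Line-of-best-fit values at the lowest and highest targets for the cycle."""
    slope, intercept = fit_line(targets, results)
    low, high = min(targets), max(targets)
    return (low, slope * low + intercept), (high, slope * high + intercept)
```

A laboratory whose results are exactly twice the targets gets a slope of 2 and an intercept of 0. Its fitted levels are therefore double the target low and high values.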

To provide a comparison of all methods, linear regression is performed against the same data source (all results or a specific target). The slopes represent the bias obtained by all laboratories: slopes from all labs are shown in ‘grey’, slopes from participants using the same assessment category in ‘navy blue’, and your slope is highlighted in ‘light blue’.

This histogram represents the imprecision (CV%) obtained from all laboratories. It is calculated from the scatter around the target source linear regression line of best fit. The histogram illustrates all labs in ‘grey’, participants using the same assessment category in ‘navy blue’ and highlighting your CV% as a ‘light blue’ dot.

Provides participants with the SD, CV and Bias calculated from linear regression, and shows how your results ranked against all participating laboratories. Ranking (from 0, best, to 100, worst) is illustrated on the top row of the scale provided under the bar.

Standard Deviation: The SD is the standard error of the estimate (Sy.x) and can be regarded as the average SD across the range of concentrations analysed. SD provides a value in the units of the test. SD will tend to be high if you report high results and low if you report low results.

Coefficient of Variation: The SD divided by the mid-point of your laboratory’s range of concentrations, expressed as a percentage:

$$\text{CV\%} = \frac{\text{SD}}{(\text{low value} + \text{high value})/2} \times 100$$

Average Bias: Your biases at the low, mid and high values are determined. These are the differences between the line of expectation (45° line) and your line of best fit.

The average bias is calculated as:

$$\text{Bias} = \frac{[\text{low bias}] + [\text{mid bias}] + [\text{high bias}]}{3}$$
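The SD, CV% and average bias definitions above can be sketched as below, given a fitted slope and intercept. Two points are assumptions. First, the "low value" and "high value" in the CV% are taken to be the laboratory's fitted levels at the lowest and highest targets. Second, the bracketed bias terms are read as magnitudes, so biases of opposite sign do not cancel. The function name is hypothetical.

```python
import math


def regression_summary(targets, results, slope, intercept):
    """Return (SD, CV%, average bias) for a fitted line y = slope * x + intercept."""

    def fit(x):
        # Value on the laboratory's line of best fit at target level x.
        return slope * x + intercept

    n = len(targets)
    residuals = [y - fit(x) for x, y in zip(targets, results)]
    # Standard error of the estimate (Sy.x): scatter about the line, n - 2 degrees of freedom.
    sd = math.sqrt(sum(r * r for r in residuals) / (n - 2))

    low_t, high_t = min(targets), max(targets)
    mid_t = (low_t + high_t) / 2
    # CV%: SD divided by the mid-point of the laboratory's fitted low and high values.
    cv = 100 * sd / ((fit(low_t) + fit(high_t)) / 2)

    # Bias at each level: fitted value minus the 45-degree line of expectation (y = x).
    biases = [fit(t) - t for t in (low_t, mid_t, high_t)]
    # Assumption: bracketed terms are magnitudes, so opposite-signed biases do not cancel.
    avg_bias = sum(abs(b) for b in biases) / 3
    return sd, cv, avg_bias
```

For targets 1 to 4 with results 1, 3, 3 and 5 (fitted slope 1.2, intercept 0), the sketch gives an SD of about 0.63, a CV of about 21% and an average bias of 0.5.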

Provides participants with a table summarising their SD, CV, Bias, MPS and performance ranking.

Provides participants with information, such as the target source used for linear regression and lists any method category changes made across the survey sample range being analysed.


The assessment criteria are defined as measurand performance. As analytical error is due to both imprecision and bias, program organisers have defined Total Error as follows:

Total Error = 2SD + Bias

The quality of your laboratory’s performance is then determined by comparing the Total Error to the Analytical Performance Specification at the mid-point of the range of measurand concentrations for the cycle as follows:

$$\text{Measurand Performance Score (MPS)} = \frac{2\,\text{SD} + \text{Bias}}{\text{Analytical Performance Specification}}$$

These examples of bicarbonate analyses may assist in understanding this method of assessment.

Example – Laboratory 1

SD = 0.8 mmol/L; Bias = 0.5 mmol/L

Total Error = (2 × 0.8) + 0.5 = 2.1 mmol/L

Measurand Performance = 2.1 / 2.5 = 0.84

Note: When the Total Error is less than the Analytical Performance Specification then the Measurand Performance will be less than 1.0. This is the desired level of performance.

Example – Laboratory 2

SD = 1.5 mmol/L; Bias = 0.1 mmol/L

Total Error = (2 × 1.5) + 0.1 = 3.1 mmol/L

Measurand Performance = 3.1 / 2.5 = 1.24

An undesirable result – due predominantly to imprecision.

Example – Laboratory 3

SD = 0.5 mmol/L; Bias = 2.0 mmol/L

Total Error = (2 × 0.5) + 2.0 = 3.0 mmol/L

Measurand Performance = 3.0 / 2.5 = 1.20

An undesirable result – due predominantly to bias.
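The three bicarbonate examples above can be reproduced with a short sketch of the Total Error and Measurand Performance Score definitions. The APS of 2.5 mmol/L is the denominator used in the examples, and the function names are hypothetical.

```python
def total_error(sd, bias):
    """Total Error = 2SD + Bias."""
    return 2 * sd + bias


def measurand_performance(sd, bias, aps):
    """MPS: Total Error divided by the APS at the mid-point of the cycle's range."""
    return total_error(sd, bias) / aps


for lab, sd, bias in [("Laboratory 1", 0.8, 0.5),
                      ("Laboratory 2", 1.5, 0.1),
                      ("Laboratory 3", 0.5, 2.0)]:
    mps = measurand_performance(sd, bias, aps=2.5)
    verdict = "desirable" if mps < 1.0 else "undesirable"
    print(f"{lab}: TE = {total_error(sd, bias):.1f} mmol/L, MPS = {mps:.2f} ({verdict})")
```

The loop prints MPS values of 0.84, 1.24 and 1.20, matching the three laboratories. Only Laboratory 1 falls below 1.0.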

The method comparison provides a breakdown of all the methods (assessment categories) used by participants. It lists the statistics calculated for the latest survey results (left) and the Precision and Accuracy results calculated from the linear regression analysis over the sample range, giving the median values for each method listed to facilitate peer group comparison. The full set of statistics is shown only when there are four or more values in the dataset. With three values, only the median is shown, and method categories with two or fewer users do not present any statistical data.
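The display rule above can be sketched as a function that returns what a method category would show for a given number of values. The guide does not list the members of the "full set of statistics", so the mean and SD below are placeholders. The function name is hypothetical.

```python
from statistics import mean, median, stdev


def method_statistics(values):
    """Statistics shown for one method category, following the display rule above."""
    n = len(values)
    if n <= 2:
        return {}  # two or fewer users: no statistical data
    if n == 3:
        return {"n": n, "median": median(values)}  # three values: median only
    # Four or more values: full set (mean and SD are placeholders for the real set).
    return {"n": n, "median": median(values), "mean": mean(values), "sd": stdev(values)}
```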

The “Survey Report Interpretation” flowchart can be found on the RCPAQAP website under “Resources”.

Programs that survey qualitative measurands will also provide a simple, direct comparison of your qualitative results with all results received and with participants using the same method system. Results are compared to a known target and assessed using standardised terms of assessment, “Concordant” or “Discordant”.

Provides a frequency distribution of the results submitted by all participants. The blue circle indicates the participant’s result.

Provides a breakdown of the method category selection provided by the participant.

Provides a summary of performance of all survey samples to date, listing the target result, results from the participant and the assessment provided.

Provides participants with a breakdown of Sample one’s result frequency based on method category, displaying the first three categories.

The grey bars represent the result distribution from all participants, the dark blue bars represent the participant’s method based on the category, and the blue circle represents the participant’s result.

Provides participants with a breakdown of Sample two’s result frequency based on method category, displaying the first three categories.


The General and Basic Compatibility program targets and acceptable responses are determined by consensus. The final acceptable responses are at the discretion of the RCPAQAP Transfusion Advisory Committee. Each response is allocated a score which is shown on the report as well as a pointer indicating your result.

Performance assessment is based on a numerical scoring system and the ‘performance summary’ of the report indicates the number of points accrued by the laboratory compared to the target score. The scoring system for the general and basic compatibility programs has a maximum number of possible points and participants will lose points for errors. The report follows the standardised reporting structure, which provides the Performance summary, result review, Method comparison (if applicable), Commentary and Cumulative summary of the year’s performance.

The General Compatibility and Basic Compatibility programs are designed for prompt identification of areas requiring corrective action. Due to the complexity of the assessment process, delays to the survey report release date may be experienced. Therefore, in 2023 we plan to distribute a preliminary report one week after the closing date of each survey. This report is intended as a guide for those laboratories who wish to troubleshoot their submitted results compared to the expected results while their remaining samples are still viable.

The tables below show the numeric scoring and performance assessment systems:

General & Basic Compatibility Program – Performance Assessment System

| Survey Component | Points per Component (if applicable) | Total Points |
|---|---|---|
| Patient Identification | 2 points per assessed result | 8 points |
| Patient Blood Group* | 2 points per mandatory reaction; 10 points for blood group* | 22 points |
| Patient DAT | 10 points | |
| Patient Antibody Screen* | 10 points | |
| Patient Antibody Identification | 10 points | |
| Phenotyping | 10 points per assessed result | Variable |
| Donor Grouping* | 2 points per mandatory reaction; 10 points for blood group* | 18 points per donor unit |
| Compatibility: Crossmatch Reaction* | 10 points per donor unit | 10 points per donor unit |
| Compatibility: Transfuse Unit? | 10 points per donor unit | 10 points per donor unit |

*Indicates the critical areas of the Transfusion QAP surveys.
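The table above implies a maximum possible score that depends on how many donor units and phenotyping results a survey includes. The sketch below computes that maximum and the score after points lost for errors. It assumes the DAT, antibody screen and antibody identification components each total 10 points (their total cells are blank in the table). The names are hypothetical.

```python
# Points taken from the General & Basic Compatibility table above.
FIXED_POINTS = {
    "Patient Identification": 8,
    "Patient Blood Group": 22,
    "Patient DAT": 10,                      # assumption: total equals the 10-point component
    "Patient Antibody Screen": 10,          # assumption, as above
    "Patient Antibody Identification": 10,  # assumption, as above
}
PER_DONOR_UNIT = {
    "Donor Grouping": 18,
    "Crossmatch Reaction": 10,
    "Transfuse Unit?": 10,
}
PER_PHENOTYPE_RESULT = 10


def maximum_points(donor_units, phenotype_results):
    """Maximum possible score for a survey with the given units and phenotype results."""
    return (sum(FIXED_POINTS.values())
            + donor_units * sum(PER_DONOR_UNIT.values())
            + phenotype_results * PER_PHENOTYPE_RESULT)


def survey_score(donor_units, phenotype_results, points_lost):
    """Points accrued: the maximum minus points lost for errors."""
    return maximum_points(donor_units, phenotype_results) - points_lost
```

For a survey with two donor units and three assessed phenotyping results, the maximum is 60 + 2 × 38 + 3 × 10 = 166 points.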

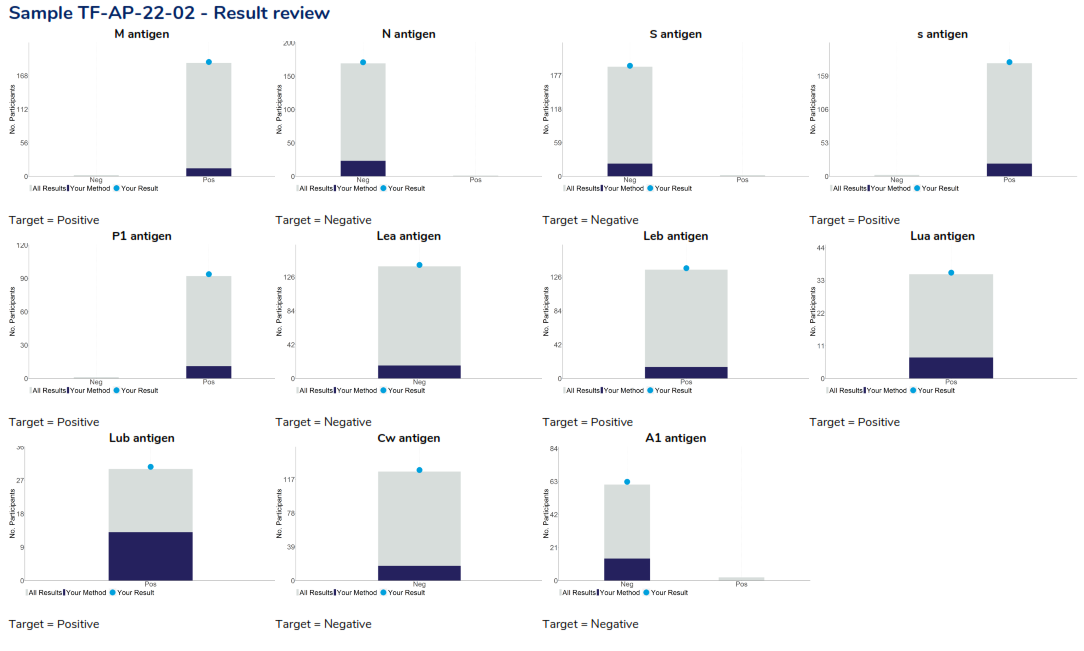

Participants report antigen results together with the method system used. New participants and current participants introducing new antigen-testing profiles in their system must ensure the method classification is updated when entering survey results.

The Phenotyping module target and acceptable responses are determined by consensus (80% consensus must be reached to assess survey results). The final acceptable responses are at the discretion of the RCPAQAP Transfusion Advisory Committee.

The report provides participants with their performance assessment, a review of the performance for all antigens and the cumulative assessment. An example of the result review page is provided below.

Phenotyping program – Result review: Provides a breakdown of all participant responses returned, highlighting the participant’s response as a light blue dot and other laboratories using the participant’s method in dark blue, with the target response for each antigen listed below the respective histogram.

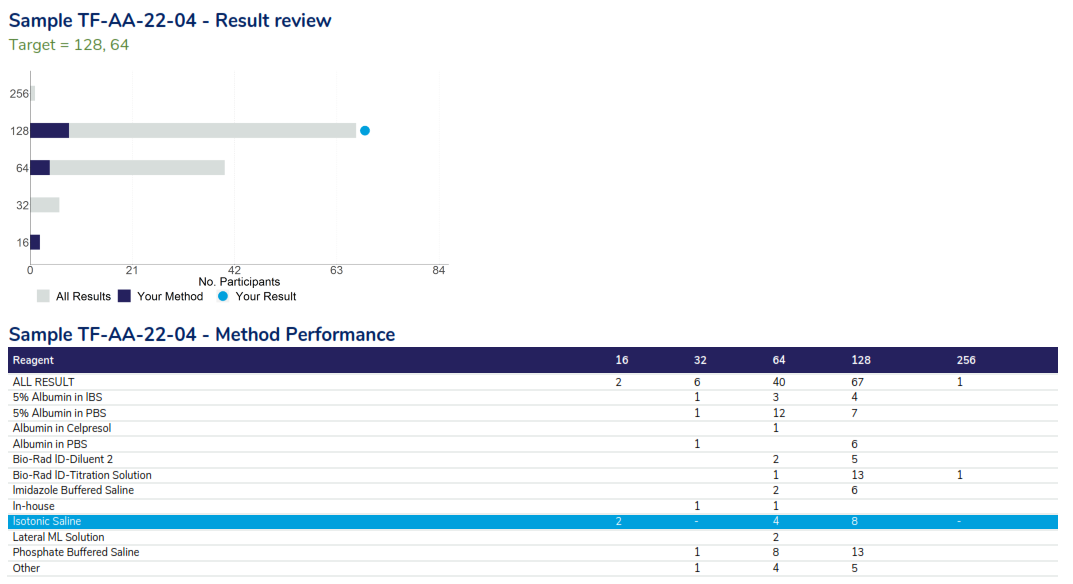

The Antibody Titre program target and acceptable range are determined by consensus, with titres above and below the target deemed acceptable. When a clear consensus cannot be demonstrated, the target value and acceptable range will be set by the Transfusion Advisory Committee. Any deviation in how the survey target and acceptable range are set will be stated in the survey report.
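A sketch of the acceptable-range check described above, assuming titres are reported as the reciprocal of a doubling dilution (16 for 1:16). It also assumes "above and below the target" means one doubling dilution either side by default. That step count is an assumption exposed as the `steps_allowed` parameter, not a stated program rule. The names are hypothetical.

```python
def titre_steps(titre):
    """Number of doubling dilutions for an integer titre reported as 1, 2, 4, 8, ..."""
    steps = titre.bit_length() - 1
    if titre < 1 or 2 ** steps != titre:
        raise ValueError("titre must be a power of two, e.g. 16 for 1:16")
    return steps


def titre_acceptable(result, target, steps_allowed=1):
    """True if the result is within `steps_allowed` doubling dilutions of the target."""
    # Assumption: one dilution either side by default; the program may define it differently.
    return abs(titre_steps(result) - titre_steps(target)) <= steps_allowed
```

With a target of 16, results of 8 and 32 are accepted under the one-dilution assumption, while 64 is not.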

The report is structured to provide the performance assessment, a review of the results returned, method performance, commentary and the participant’s cumulative assessment. An example of the result review page is provided below.

Antibody Titre result review: illustrates the responses received from all participants as a bar chart, highlighting peer group responses in dark blue and your result as a light blue dot. The table below provides a summary of performance based on method.

The Transfusion Competency Program target and acceptable responses are determined by consensus. The final acceptable responses are at the discretion of the RCPAQAP Transfusion Advisory Committee. Each response is allocated a score which is shown on the report as well as a light blue circle indicating your result.

Performance assessment is based on a numerical scoring system; each section of the report indicates the number of points accrued by the laboratory compared to the expected target score.

The report follows the standardised reporting structure, which provides the Performance summary, result review, Method comparison (if applicable), Commentary and Cumulative summary of the year’s performance.

Transfusion Competency Program – Performance Assessment System

| | Survey Component | Points per Component (if applicable) | Total Points |
|---|---|---|---|
| 1. | Patient Identification | 2 points | 8 points |
| 2. | Patient Blood Group Reactions | 2 points per mandatory reaction | 22 points |
| | Patient Blood Group Result | 10 points | |
| 3. | Donor Unit Blood Group Reactions | 2 points per mandatory reaction | 18 points per donor unit |
| | Donor Unit Blood Group Result | 10 points | |
| 4. | Patient DAT | 10 points | |
| 5. | Patient Antibody Screen | 10 points | |
| 6. | Patient Antibody Identification | 10 points | |
| 7. | Patient Phenotyping | 10 points | 30 points |
| | Donor Units Phenotyping | 10 points per donor unit | |
| 8. | Compatibility – Crossmatch Reaction | 10 points per donor unit | 20 points |

Acceptable responses for the Blood Group and Antibody programs are based on consensus. The final acceptable responses are at the discretion of the RCPAQAP Transfusion Advisory Committee. After the survey close date, the survey results are reviewed and assessed, and participants are issued with a survey report that highlights the laboratory or individual performance. The report follows the standardised reporting structure, which provides the Performance summary, result review, Method comparison (if applicable), Commentary and Cumulative summary of the year’s performance. A guide to interpreting the report can be found on the myQAP help page.

Performance is based on a numerical scoring system with points allocated for each correct answer (see table below). Survey reports provide Year to Date scores to allow participants to assess the ongoing performance of the laboratory or individual and to highlight areas requiring corrective action.

Blood Group and Antibody Program – Performance Assessment Scoring System

| | Survey Component | Points per Component (if applicable) | Total Points |
|---|---|---|---|
| 1. | Patient Identification | 2 points per correct identifier | 8 points |
| 2. | Patient Blood Group Reactions | 2 points per mandatory reaction, 10 points for blood group | 20 points |
| 3. | Patient Antibody Screen | 10 points | |
| 4. | Patient Antibody Identification | 10 points | |

The survey objectives and acceptable responses for the Snake Venom program are reviewed and assessed against RCPAQAP Transfusion survey objectives and in consultation with a specialist Toxinologist to enable participant performance to be measured.

Reports follow the standardised format to illustrate survey results, including the Performance summary, Result review, Commentary and Cumulative summary that shows participant performance over the last three surveys.

The result review includes a distribution of laboratory results for the snake immunotype, clinical interpretation and reaction strength with your responses indicated by the light blue circle. An example of the result review page is provided below.

Snake Venom Detection Program – Performance Assessment System

| Test Component | Component Assessment |
|---|---|
| Snake Immunotype | Target Immunotype (Initial) |
| Clinical Interpretation | Most appropriate Clinical Response (Initial) |

Participants in the Snake Venom program are also asked to provide a revised interpretation of results based on supplied clinical information. This is not an assessable element of the survey; however, it is a useful educational tool to help laboratory staff understand the clinical implications of reported findings.

Snake Venom – Result review page, illustrating the target responses in the table at the top of the page and charts illustrating the responses received from all laboratories. Results from the participant are highlighted as a blue dot.